Letta's 7+ years in AI research redefine AI agent stacks, from Gemini 2.0 benchmarks to ethical tools like Clio, transforming industries globally #90b

Welcome to the 390,000 readers worldwide who follow AI, Future Trends & Digital Transformation!

Letta's AI Agent Stack, Built on 7+ Years of Research

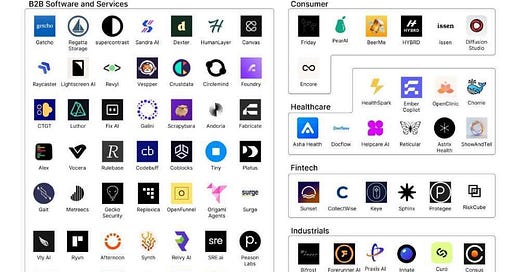

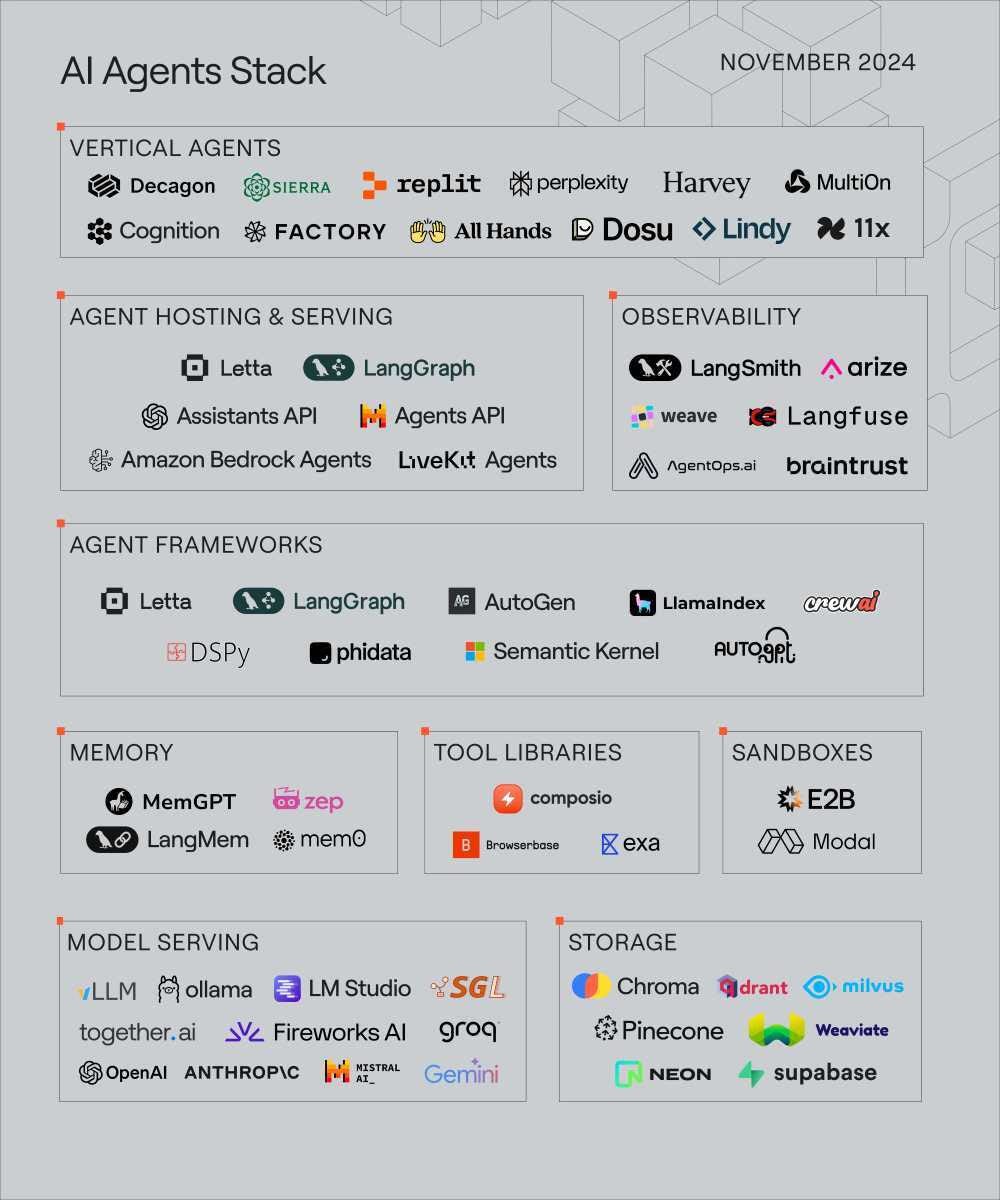

Drawing on more than a year of working with open-source AI and over 7 years of AI research, Letta reimagined the AI agent stack to reflect the tools developers actually use.

Model serving: At the heart of every AI agent is an LLM that processes requests. These models are served either through hosted APIs (OpenAI, Anthropic) or through local deployments (vLLM).
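Because vLLM can expose an OpenAI-compatible HTTP API, the same client code can often target either a hosted API or a local deployment by changing only the base URL. The sketch below uses only Python's standard library to build (but not send) such a chat-completion request. The model name, API key, and the helper `build_chat_request` are placeholders of our own; Anthropic's API uses its own request format and is not covered here.

```python
import json
import urllib.request

# Hypothetical endpoints: a hosted OpenAI-style API and a local vLLM server
# (vLLM's OpenAI-compatible server listens on port 8000 by default).
HOSTED_BASE_URL = "https://api.openai.com/v1"
LOCAL_BASE_URL = "http://localhost:8000/v1"


def build_chat_request(base_url: str, model: str, messages: list[dict],
                       api_key: str = "") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completion request."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    if api_key:
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=body,
        headers=headers,
        method="POST",
    )


if __name__ == "__main__":
    messages = [{"role": "user", "content": "Summarize today's tasks."}]
    # Same client code, two serving options: only the base URL changes.
    for base in (HOSTED_BASE_URL, LOCAL_BASE_URL):
        req = build_chat_request(base, "placeholder-model", messages)
        print(req.get_method(), req.full_url)
    # Sending would be urllib.request.urlopen(req), which needs a live server.
```

Keeping request construction separate from sending makes it easy to swap serving backends without touching the rest of the agent.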

Storage: Agents must persist conversation histories and memories. Popular options include vector databases such as Chroma and Pinecone, and conventional databases such as Postgres.
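To make "storing and recalling memories" concrete, here is a toy, in-memory sketch of vector-style retrieval. It is not how Chroma, Pinecone, or Postgres work internally: a real system would use a learned embedding model and an indexed database, whereas this stand-in assumes a bag-of-words count vector and cosine similarity. The class `ToyMemoryStore` and its methods are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class ToyMemoryStore:
    """Hypothetical in-memory store for an agent's remembered snippets."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        # Store the raw text alongside its vector, as a vector DB row would.
        self._items.append((text, embed(text)))

    def search(self, query: str, k: int = 1) -> list[str]:
        """Return the k stored texts most similar to the query."""
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


if __name__ == "__main__":
    memory = ToyMemoryStore()
    memory.add("The user's favorite language is Python.")
    memory.add("The user is planning a trip to Lisbon in May.")
    memory.add("The user prefers short answers.")
    print(memory.search("When is the trip to Lisbon?"))
```

The retrieved snippet would then be placed into the LLM's prompt, which is the basic pattern behind vector-database-backed agent memory.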

Tools and libraries: AI agents can call external functions, such as web browsers and search tools, when needed. The model does not execute a tool itself; instead, it outputs which tool to call and what arguments to pass, and the surrounding program runs the tool.
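This division of labor can be sketched as follows: the model emits a structured tool call (assumed here to be JSON with `name` and `arguments` fields, loosely modeled on common function-calling formats), and the host program, not the model, looks up and runs the function. The tool names, the hard-coded "model output", and `dispatch_tool_call` are hypothetical; a real agent would read the tool call from an LLM response.

```python
import json
from typing import Any, Callable


def web_search(query: str) -> str:
    """Hypothetical search tool; a real one would call a search API."""
    return f"(stub) top result for {query!r}"


def add_numbers(a: float, b: float) -> float:
    """A trivial tool, useful for showing argument passing."""
    return a + b


# Registry of tools the host is willing to run on the model's behalf.
TOOLS: dict[str, Callable[..., Any]] = {
    "web_search": web_search,
    "add_numbers": add_numbers,
}


def dispatch_tool_call(model_output: str) -> Any:
    """Parse the model's tool call and execute the named tool.

    The model only names the tool and its arguments; this function
    is where the tool actually runs.
    """
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))


if __name__ == "__main__":
    # Pretend this string came back from the LLM.
    fake_model_output = '{"name": "add_numbers", "arguments": {"a": 2, "b": 3}}'
    result = dispatch_tool_call(fake_model_output)
    print(result)  # normally this result is sent back to the model
```

An explicit registry also acts as an allow-list: the model can only trigger functions the host has deliberately exposed.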

Agent frameworks: Frameworks such as LangChain and Letta manage agent state, memory, and context, including how agents store and reuse prior data and how they communicate with other agents and technologies.
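As a rough illustration of what "managing state, memory, and context" can mean, the sketch below keeps an agent's persistent facts and message history in one object and assembles a prompt that fits a fixed budget by dropping the oldest messages first. The class name, the word-count "token" budget, and the drop-oldest policy are assumptions for illustration, not the actual design of LangChain or Letta.

```python
from dataclasses import dataclass, field


@dataclass
class AgentState:
    """Hypothetical agent state: long-lived facts plus a message history."""
    persona: str
    facts: list[str] = field(default_factory=list)
    history: list[tuple[str, str]] = field(default_factory=list)  # (role, text)

    def remember(self, fact: str) -> None:
        self.facts.append(fact)

    def log(self, role: str, text: str) -> None:
        self.history.append((role, text))

    def build_context(self, budget_words: int) -> str:
        """Assemble a prompt under a word budget (a crude proxy for tokens).

        Persona and facts are always kept; older messages are dropped first.
        """
        header = [f"persona: {self.persona}"] + [f"fact: {f}" for f in self.facts]
        used = sum(len(line.split()) for line in header)
        kept: list[str] = []
        # Walk history from newest to oldest, stopping once the budget is hit,
        # so the result is always a contiguous window of the latest messages.
        for role, text in reversed(self.history):
            line = f"{role}: {text}"
            cost = len(line.split())
            if used + cost > budget_words:
                break
            kept.append(line)
            used += cost
        return "\n".join(header + list(reversed(kept)))


if __name__ == "__main__":
    state = AgentState(persona="helpful assistant")
    state.remember("user's name is Ada")
    state.log("user", "hello there")
    state.log("assistant", "hi Ada, how can I help?")
    state.log("user", "remind me what we discussed")
    print(state.build_context(budget_words=20))
```

With a 20-word budget only the latest user message survives, but the remembered fact about the user's name is still in the prompt; keeping durable facts outside the rolling history is one simple way a framework can preserve memory across long conversations.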

Hosting & Serving: Most frameworks today run agents inside a script or notebook, but in the future agents will need to be deployed as API-accessible services, just as LLMs are.
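One way to picture an agent as an API-accessible service is a small HTTP server that keeps each agent's state on the server side, so clients only send an agent ID and a message. The sketch below uses Python's standard-library `http.server` and `http.client`; the `/agents/<id>/messages` route, the JSON fields, and the `agent_reply` placeholder (standing in for a real LLM call) are all hypothetical, and a production service would add persistent storage and authentication.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Server-side agent state: agent ID -> message list (in memory only).
AGENTS: dict[str, list[dict]] = {}
AGENTS_LOCK = threading.Lock()


def agent_reply(history: list[dict]) -> str:
    """Placeholder for an LLM call: reports how many messages the agent holds."""
    return f"I have seen {len(history)} message(s); last: {history[-1]['content']}"


class AgentHandler(BaseHTTPRequestHandler):
    """Handles POST /agents/<agent_id>/messages with a JSON body {"message": ...}."""

    def do_POST(self) -> None:
        parts = self.path.strip("/").split("/")
        if len(parts) != 3 or parts[0] != "agents" or parts[2] != "messages":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        with AGENTS_LOCK:  # requests may arrive on several threads
            history = AGENTS.setdefault(parts[1], [])
            history.append({"role": "user", "content": payload.get("message", "")})
            reply = agent_reply(history)
            history.append({"role": "assistant", "content": reply})
        body = json.dumps({"reply": reply}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args) -> None:
        pass  # silence per-request logging for the example


def serve_in_background(host: str = "127.0.0.1", port: int = 0) -> ThreadingHTTPServer:
    """Start the agent service on a daemon thread; port 0 picks a free port."""
    server = ThreadingHTTPServer((host, port), AgentHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def send_message(host: str, port: int, agent_id: str, message: str) -> str:
    """Client side: POST a message to one agent and return its reply."""
    conn = http.client.HTTPConnection(host, port, timeout=5)
    try:
        conn.request(
            "POST",
            f"/agents/{agent_id}/messages",
            body=json.dumps({"message": message}).encode("utf-8"),
            headers={"Content-Type": "application/json"},
        )
        return json.loads(conn.getresponse().read())["reply"]
    finally:
        conn.close()


if __name__ == "__main__":
    server = serve_in_background()
    host, port = server.server_address[:2]
    print(send_message(host, port, "demo", "hello"))
    print(send_message(host, port, "demo", "what did I just say?"))
    server.shutdown()
    server.server_close()
```

Because the history lives on the server, any client that knows the agent ID can continue the same conversation, which is the main difference from running an agent inside a single script or notebook.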

Why is this important? AI agents are still young but growing fast. More powerful frameworks and deployment options could make AI agents more accessible and effective across many industries.

Gemini 2.0 Flash outperforms GPT-4o and Claude-3.6 Sonnet on benchmarks.


Subscribe to DIGITAL STORM weekly to keep reading this post and get 7 days of free access to the full post archives.