AI is getting faster and cheaper—Grok 3 slashes costs, Nvidia boosts speed 25x, and GPT-4.5 trades accuracy for price. #100b

Welcome to the 470,000+ global readers following AI, Future Trends & Digital Transformation!

LLMs Are Becoming Smarter and More Affordable

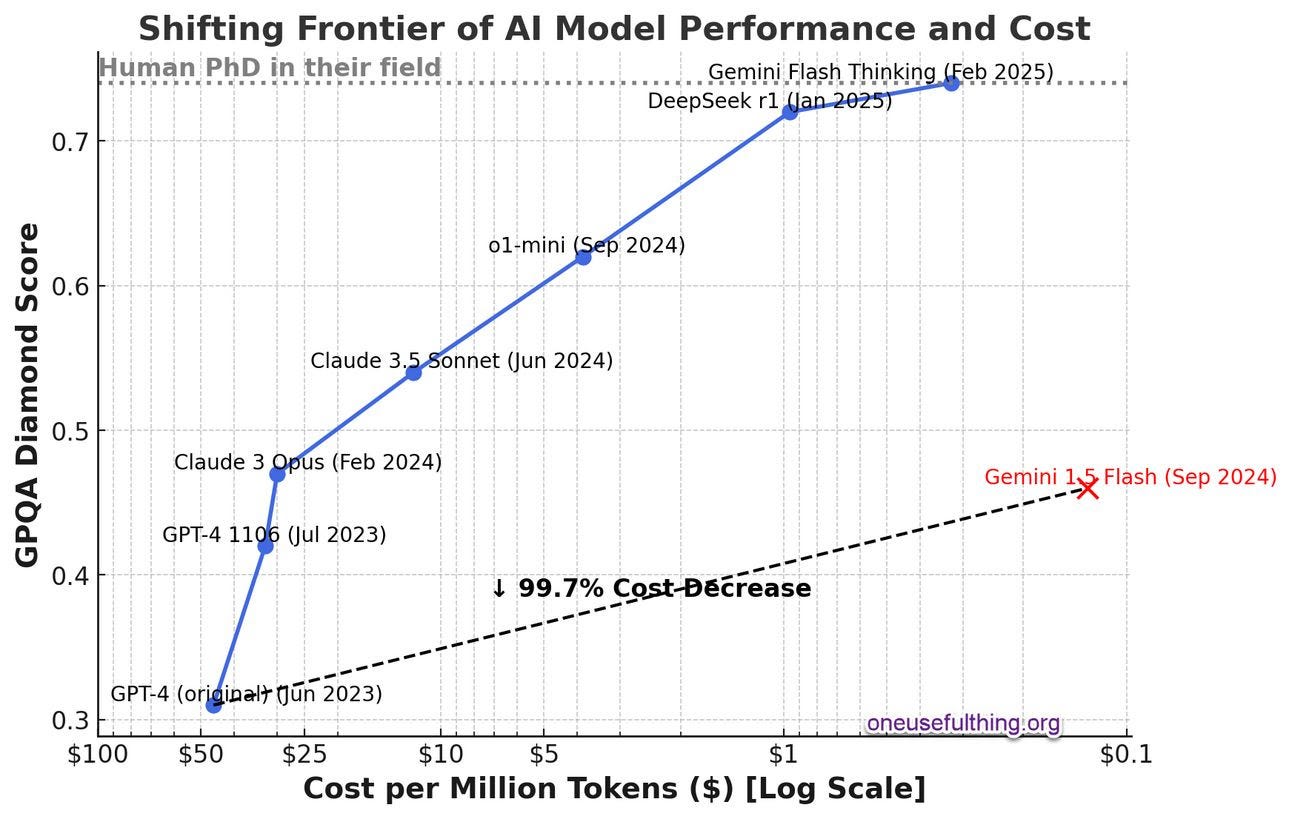

Recent advancements in large language models (LLMs) are delivering enhanced performance at significantly reduced costs.

Enhanced Intelligence: Models like Grok 3 have been trained with an unprecedented amount of compute, more than 10²⁶ FLOPs, leading to stronger reasoning and problem-solving capabilities.

Cost Reduction: Google's Gemini 1.5 Flash has seen substantial price cuts, with input costs reduced by 78% to $0.075 per million tokens and output costs by 71% to $0.30 per million tokens for prompts under 128K tokens. (Sources: developers.googleblog.com, docsbot.ai, masterconcept.ai)
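To make those prices concrete, here is a minimal Python sketch that estimates what a workload would cost at the quoted rates and backs out the earlier prices implied by the percentage cuts. Only the two per-million-token prices and the two percentages come from the text above; the function names and the example workload are illustrative assumptions.

```python
# Gemini 1.5 Flash prices quoted above (prompts under 128K tokens),
# in USD per million tokens.
INPUT_PRICE_PER_M = 0.075
OUTPUT_PRICE_PER_M = 0.30


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost for a given number of input and output tokens."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_M
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_M
    return input_cost + output_cost


def price_before_cut(new_price: float, cut_fraction: float) -> float:
    """Earlier price implied by a fractional cut (0.78 means a 78% cut)."""
    return new_price / (1 - cut_fraction)


if __name__ == "__main__":
    # Hypothetical workload, chosen only for illustration.
    cost = estimate_cost(input_tokens=10_000_000, output_tokens=2_000_000)
    print(f"Estimated cost: ${cost:.2f}")
    print(f"Implied earlier input price: ${price_before_cut(INPUT_PRICE_PER_M, 0.78):.2f} per million tokens")
    print(f"Implied earlier output price: ${price_before_cut(OUTPUT_PRICE_PER_M, 0.71):.2f} per million tokens")
```

For this hypothetical workload of 10 million input tokens and 2 million output tokens, the estimate comes to about $1.35. The implied earlier prices are approximate because the percentages in the text are rounded.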

Why important? The combination of increased computational resources and competitive innovation is driving LLMs to become both more powerful and cost-effective, making advanced AI accessible to a broader audience.

Thought-Provoking Question: As LLMs become more advanced and affordable, how will this democratization of AI impact industries and society at large?

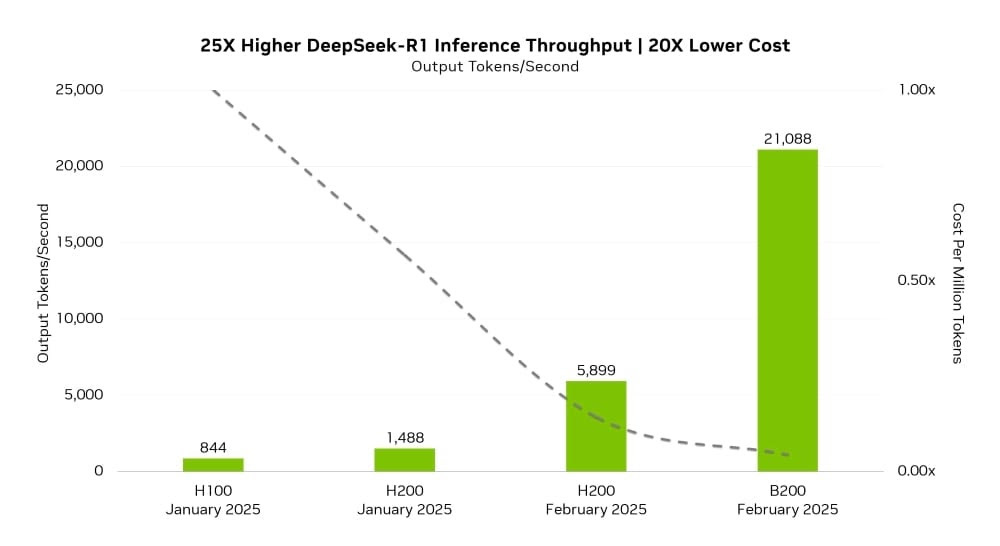

Nvidia and DeepSeek Partner to Supercharge AI Performance

Nvidia and DeepSeek have collaborated to optimize the DeepSeek-R1 model for Nvidia's Blackwell architecture, achieving what Nvidia reports as a 25x increase in revenue and a 20x reduction in cost per token compared with the previous-generation H100 GPU. (Sources: x.com, threads.net, m.facebook.com)

Enhanced Performance: The Blackwell B200 GPUs process 21,088 tokens per second, roughly 25 times the H100's 844 tokens per second. (Source: pcguide.com)

Efficient Precision: Using FP4 precision, the B200 retains 99.8% of the accuracy of FP8 while consuming less power.

Cost Savings: With cost per token down 20x, operating expenses fall substantially, making AI deployments more profitable.
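The throughput figures above can be checked with a few lines of arithmetic. The Python sketch below computes the B200-to-H100 throughput ratio and shows how cost per million tokens scales with throughput. The hourly price is a made-up placeholder, not an Nvidia or cloud-provider figure, and applying the same hourly price to both GPUs is a simplifying assumption.

```python
# Throughput figures quoted above, in tokens per second.
H100_TOKENS_PER_SEC = 844
B200_TOKENS_PER_SEC = 21_088


def speedup(new_tps: float, old_tps: float) -> float:
    """How many times faster the new throughput is than the old one."""
    return new_tps / old_tps


def cost_per_million_tokens(hourly_cost_usd: float, tokens_per_sec: float) -> float:
    """USD cost to generate one million tokens at a given hourly price."""
    tokens_per_hour = tokens_per_sec * 3600
    return hourly_cost_usd / tokens_per_hour * 1_000_000


if __name__ == "__main__":
    ratio = speedup(B200_TOKENS_PER_SEC, H100_TOKENS_PER_SEC)
    print(f"Throughput ratio: {ratio:.2f}x")

    # Hypothetical hourly price, used for both GPUs purely for illustration.
    hypothetical_hourly_cost = 3.00
    for name, tps in [("H100", H100_TOKENS_PER_SEC), ("B200", B200_TOKENS_PER_SEC)]:
        cost = cost_per_million_tokens(hypothetical_hourly_cost, tps)
        print(f"{name}: ${cost:.4f} per million tokens")
```

At an equal hourly price, cost per token falls by exactly the same factor that throughput rises. In practice the two GPUs are unlikely to cost the same per hour, so the real reduction in cost per token will differ from the raw throughput ratio.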

Why important?

Wanna read further? Subscribe….

Subscribe to DIGITAL STORM weekly to keep reading this post and get 7 days of free access to the full post archives.